Table of contents

- Kunal Kushwaha's Inspiring Opening Remarks at the Event
- Talk 1: DevOps Vs MLOps
  - Why not SDLC?
  - SDLC to DevOps
- Talk 2: GitOps
- Talk 3: Containerizing When & How?
  - What are Containers?
  - Container Vs Virtual Machines: How they are different
  - Hypervisor
  - Structure of Containers
  - Profitability
  - How Docker helps with this
    - Isolation
    - Scalability
    - Efficiency
  - Disadvantages of Containers
    - Security risk
    - Orchestration complexity
    - Limited resource organization
  - Orchestration with Kubernetes
  - Container Registries
  - Container Monitoring/Logging
  - Automation Tools
- An Amazing Panel Discussion😁
- 🎉Closing Ceremony🎉

The WeMakeDevs Delhi Meetup was an exciting and informative event that gave attendees valuable insights into DevOps and open source. Attending it helped me gain a deeper understanding of the latest trends, best practices, and technologies in this rapidly evolving field. In this blog, I will share my personal experience at the meetup and the key lessons I took away, from the talks and discussions to the networking opportunities. So, let's dive in and explore the highlights of the WeMakeDevs Delhi Meetup!

Kunal Kushwaha's Inspiring Opening Remarks at the Event

Kunal Kushwaha, a well-known DevOps enthusiast and YouTuber, provided the opening remarks for the WeMakeDevs Delhi Meetup. Although Kunal was unable to attend the event in person, he addressed the audience virtually through a video.

Kunal began his remarks by reminding the audience about the importance of maintaining a code of conduct and respectful behavior throughout the event. He encouraged attendees to engage in constructive and collaborative discussions, and to approach the event with an open mind and willingness to learn.

Kunal also shared an exciting surprise: an offer that gave attendees the opportunity to further develop their DevOps skills and knowledge. The announcement was met with great enthusiasm.

Overall, Kunal's opening remarks provided a positive and inspiring start to the WeMakeDevs Delhi Meetup and set the tone for a productive and engaging event. Attendees were grateful for his contribution and insights and appreciated his efforts to create a supportive and inclusive environment for all participants.

Talk 1: DevOps Vs MLOps

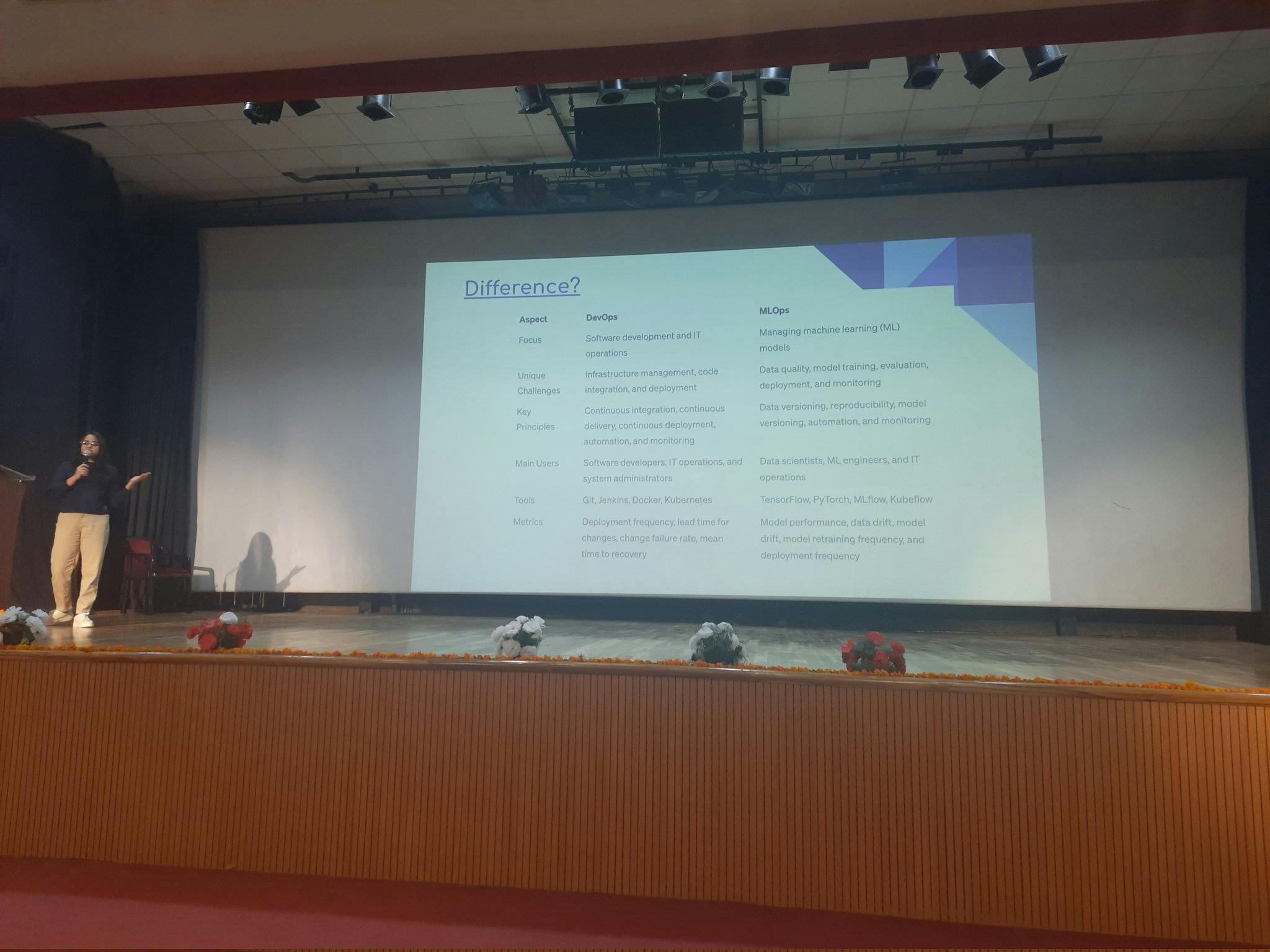

One of the highlights of the WeMakeDevs Event was the informative and thought-provoking talk on "DevOps vs MLOps". The speaker delved into the key differences between DevOps and MLOps, highlighting the unique challenges and considerations involved in managing machine learning (ML) workflows.

The speaker explained that while DevOps focuses on automation and on collaboration between development and IT operations teams, MLOps is a specialized field that focuses on managing the lifecycle of ML models. This includes tasks such as data preparation, model training, deployment, and monitoring.

The speaker also emphasized the importance of incorporating ML workflows into existing DevOps processes, rather than treating them as separate entities. This can help organizations to effectively manage and scale their ML workflows, while also ensuring that they align with the broader goals of the organization.

Overall, the talk on "DevOps vs MLOps" was a fascinating exploration of the intersection between these two important fields. It highlighted the unique challenges and opportunities involved in managing ML workflows, and provided attendees with valuable insights into how to effectively integrate MLOps into their existing DevOps processes.

Following the talk on "DevOps vs MLOps," the speakers generously shared a free e-book with the attendees, which covered a range of topics related to MLOps. This was a fantastic resource that provided practical tips and insights into managing machine learning workflows.

In addition to sharing the e-book, the speakers also gave attendees a sneak peek at their upcoming event.

Why not SDLC?

After the engaging discussion of DevOps vs MLOps, the speakers posed an interesting question: "Why not SDLC?" They went on to discuss the traditional Software Development Lifecycle (SDLC) and its challenges in the modern era of software development.

The speakers pointed out that the traditional SDLC model (typically implemented as the waterfall approach) is sequential and rigid, which can delay the development process. Because it handles long-term planning, documentation, and testing as separate phases, feedback loops are slow, making it difficult to keep up with the rapidly changing needs of customers and the market.

Furthermore, the speakers emphasized that SDLC does not fully address the complexity and speed of modern software development. They suggested that a more agile and flexible approach, such as DevOps or MLOps, can provide a more efficient and effective way to deliver software.

Overall, the speakers' insights on the limitations of SDLC were thought-provoking and highlighted the need for more adaptive and collaborative approaches to software development.

SDLC to DevOps

The speakers then talked about the evolution of software development methodologies from SDLC to DevOps. They explained how DevOps is a natural progression from SDLC and how it addresses the challenges of traditional software development.

They highlighted that DevOps focuses on creating a culture of collaboration and communication between development and operations teams, which can lead to faster feedback loops, reduced time-to-market, and higher-quality software. Additionally, the speakers discussed how DevOps emphasizes automation and continuous improvement, which can help teams quickly adapt to changing requirements and technologies.

The speakers also talked about how DevOps is not just a set of tools, but rather a mindset and a culture that promotes continuous learning and experimentation. They suggested that the key to successful DevOps adoption is to focus on people, processes, and technology in a balanced way.

Overall, the speakers' discussion of SDLC to DevOps provided valuable insights into the evolution of software development methodologies and highlighted the benefits of adopting a more collaborative, agile, and iterative approach to software delivery.

Talk 2: GitOps

Introduction

The event also featured a talk on "GitOps," a relatively new methodology that has gained popularity in the DevOps community in recent years. GitOps is a way of managing infrastructure and applications using Git as the single source of truth. It emphasizes the use of version control, automated workflows, and declarative configuration to manage the entire software delivery lifecycle.

Why GitOps?

The speaker explained that GitOps provides a number of benefits over traditional DevOps methodologies. One key advantage is that it enables teams to manage their infrastructure and applications as code, which makes it easier to track changes, collaborate, and maintain consistency across environments. By using Git as the single source of truth, teams can ensure that changes are reviewed and approved through a pull request process, and that deployments are automated and auditable.

Another benefit of GitOps is that it enables teams to implement continuous delivery practices more effectively. By using automated workflows and declarative configuration, teams can ensure that deployments are consistent and repeatable across environments. This can help to reduce the risk of errors and downtime, while also enabling teams to move faster and with more confidence.
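To make the "declarative configuration" idea concrete, here is a minimal sketch of the reconcile loop that GitOps controllers such as Argo CD or Flux are built around: compare the desired state committed to Git with the live state and work out the actions needed to close the gap. The state model (a dict mapping a hypothetical app name to an image tag and replica count) and the `reconcile` function are assumptions made for this illustration, not any tool's actual API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AppSpec:
    """Desired or observed state of one application (hypothetical model)."""
    image: str
    replicas: int


def reconcile(desired: dict[str, AppSpec], live: dict[str, AppSpec]) -> list[str]:
    """Return the actions needed to make `live` match `desired`.

    `desired` stands in for the manifests stored in Git (the single source of
    truth); `live` stands in for what is currently running.
    """
    actions = []
    for name, spec in sorted(desired.items()):
        current = live.get(name)
        if current is None:
            actions.append(f"create {name} ({spec.image}, {spec.replicas} replicas)")
        elif current != spec:
            actions.append(f"update {name} -> {spec.image}, {spec.replicas} replicas")
    # Anything running that is not declared in Git is drift and gets pruned.
    for name in sorted(live.keys() - desired.keys()):
        actions.append(f"delete {name}")
    return actions


desired = {
    "web": AppSpec("web:1.2.0", 3),
    "api": AppSpec("api:2.0.1", 2),
}
live = {
    "web": AppSpec("web:1.1.0", 3),   # outdated image tag
    "cron": AppSpec("cron:0.9", 1),   # running, but not declared in Git
}

for action in reconcile(desired, live):
    print(action)
```

Running it prints three actions: create `api`, update `web`, and delete `cron`. Real GitOps controllers typically repeat this comparison continuously, so a change merged into Git is rolled out automatically and manual drift is detected (whether drift is pruned automatically is usually configurable).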

GitOps Vs DevOps

The speaker also highlighted some key differences between GitOps and traditional DevOps methodologies. While DevOps emphasizes the collaboration between developers and operations teams, GitOps is more focused on using Git as the single source of truth to manage infrastructure and applications. GitOps also emphasizes the use of automated workflows and declarative configuration, whereas traditional DevOps methodologies may rely more on ad hoc scripts and manual processes.

Despite these differences, the speaker emphasized that GitOps is complementary to DevOps, and can be used to enhance and streamline existing DevOps practices. By adopting GitOps, teams can gain greater visibility and control over their infrastructure and applications, and reduce the risk of errors and downtime.

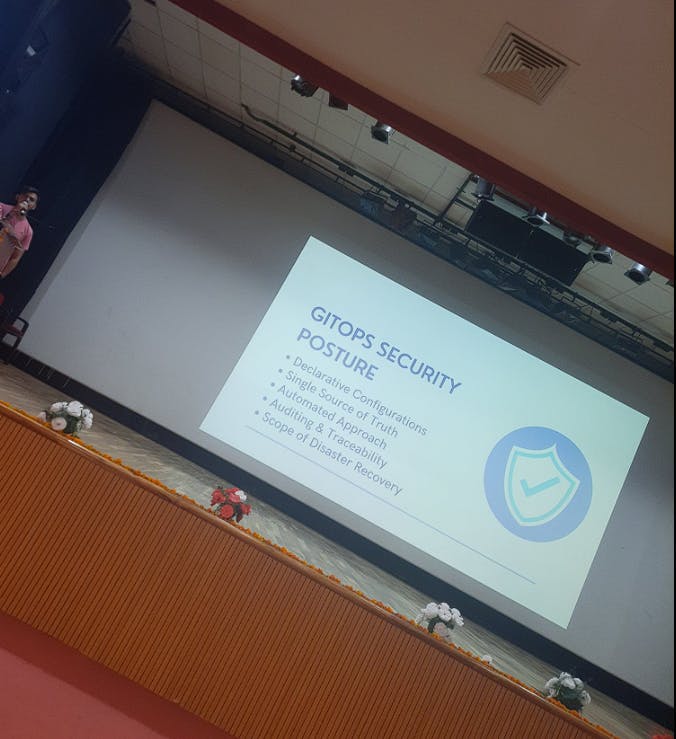

GitOps Security Postures

The speaker also discussed some key security considerations when implementing GitOps. One potential risk is that the credentials used to access Git repositories and deploy changes could be compromised. To mitigate this risk, the speaker recommended using Git hosting services that support encryption and two-factor authentication.

Another security consideration is ensuring that the changes being made through GitOps are auditable and can be traced back to specific individuals. To achieve this, the speaker recommended using pull requests and other collaborative workflows that require review and approval before changes are merged and deployed.
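As an illustration of "review and approval before merge", the sketch below checks a hypothetical pull-request record against a simple policy: at least two approvals from reviewers other than the author. The `PullRequest` fields and the two-approval threshold are assumptions for this example; in practice, Git hosting services enforce rules like this through their built-in branch protection settings.

```python
from dataclasses import dataclass, field


@dataclass
class PullRequest:
    """Minimal, hypothetical record of a change proposed to the GitOps repo."""
    author: str
    approvals: set[str] = field(default_factory=set)


def may_merge(pr: PullRequest, required_approvals: int = 2) -> bool:
    """Allow a merge (and therefore a deployment) only with enough independent reviews."""
    # Self-approval does not count, so every deployed change is traceable
    # to its author and to independent reviewers.
    independent = pr.approvals - {pr.author}
    return len(independent) >= required_approvals


print(may_merge(PullRequest("alice", {"alice", "bob"})))  # False
print(may_merge(PullRequest("alice", {"bob", "carol"})))  # True
```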

Overall, the talk on GitOps provided a comprehensive overview of this methodology, highlighting its benefits, differences from traditional DevOps, and key security considerations. It was a valuable resource for attendees who were interested in learning more about this emerging approach to managing infrastructure and applications.

Talk 3: Containerizing When & How?

What are Containers?

The talk on "Containerizing When & How?" provided a detailed overview of containers and how they can be used to manage applications in a more efficient and scalable way. Containers are lightweight and portable software packages that can run applications and services in a self-contained and isolated environment.

Container Vs Virtual Machines: How they are different

The speaker highlighted the key differences between containers and virtual machines (VMs). Each VM runs its own full guest operating system, including its own kernel, so one physical machine can run several operating systems side by side. Containers, by contrast, share the host machine's kernel and run applications as isolated processes on it. This makes containers more lightweight and efficient than VMs, as they require fewer resources to operate.

Hypervisor

The speaker also explained the role of the hypervisor, the software layer that creates and runs VMs on a physical machine. Containers do not need a hypervisor of their own, because they run directly on the host machine's kernel. This makes containers faster to start and more efficient than VMs, as they avoid the overhead of virtualized hardware and of booting a separate operating system.

Structure of Containers

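The speaker also discussed the structure of containers and how they are designed to be modular and scalable. A container image is built from multiple read-only layers: typically a base layer with the operating system's user-space files (but not a kernel, which comes from the host), followed by libraries and dependencies, and finally the application code. Each running container adds a thin writable layer on top. Because identical layers can be shared across multiple images and containers, this approach is more efficient and scalable than traditional application deployment models.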
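To illustrate why shared layers save space, here is a minimal sketch of content-addressed storage, loosely modeled on the way image layers are identified by a SHA-256 digest of their contents. The `LayerStore` class and the placeholder byte strings are hypothetical; real layers are (usually compressed) tar archives, and real runtimes and registries track much more metadata.

```python
import hashlib


class LayerStore:
    """Stores each distinct layer once, keyed by the SHA-256 digest of its bytes."""

    def __init__(self) -> None:
        self.blobs: dict[str, bytes] = {}

    def add(self, content: bytes) -> str:
        digest = "sha256:" + hashlib.sha256(content).hexdigest()
        self.blobs.setdefault(digest, content)  # identical content is stored only once
        return digest


store = LayerStore()

# Two hypothetical images built on the same base and runtime layers.
base = b"os user-space files (no kernel)"
runtime = b"python runtime and libraries"
image_a = [store.add(base), store.add(runtime), store.add(b"app A code")]
image_b = [store.add(base), store.add(runtime), store.add(b"app B code")]

print(len(image_a) + len(image_b), "layer references")  # 6 layer references
print(len(store.blobs), "layers stored")                # 4 layers stored
```

The two images reference six layers in total, but only four are stored, because the base and runtime layers have identical contents and therefore identical digests.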

Profitability

The speaker also highlighted the profitability of containerization, explaining that it can provide a number of benefits for organizations that are looking to scale their applications and infrastructure. Containerization enables teams to manage applications more efficiently, reduce costs, and increase agility. By using containers, teams can deploy and manage applications consistently across different environments, with far fewer compatibility and dependency issues.

Overall, the talk on "Containerizing When & How?" provided a comprehensive introduction to containers and their benefits. The speaker's explanations and examples helped attendees understand how containers can be used to manage applications in a more efficient and scalable way, and the talk provided a valuable resource for anyone interested in learning more about containerization.

How Docker helps with this

During the talk on "Containerizing When & How?", the speakers emphasized the importance of using a reliable containerization platform like Docker to help manage application infrastructure more efficiently. They noted that Docker provides an easy-to-use interface for creating, managing, and deploying containers, which makes it easier for teams to package applications and dependencies into a single container image.

According to the speakers, Docker also provides tools for running and scaling containers. Docker Compose defines and runs multi-container applications from a single YAML file, while Docker Swarm orchestrates containers across a cluster of hosts, with built-in support for scaling services and performing rolling updates.

Isolation

The speakers also emphasized the role of containerization in isolating different applications running on the same host machine. They noted that Docker achieves this isolation using features built into the Linux kernel, namely namespaces and control groups (cgroups), which allow multiple containers to share the host's kernel while each sees only its own view of the system.

Each container is isolated from other containers running on the same machine, and has its own file system, network stack, and process space. This makes it easier to manage dependencies and avoid conflicts between applications, and also provides a layer of security by limiting the attack surface for each application.

Scalability

According to the speakers, one of the biggest benefits of containerization is scalability. They noted that containers can be easily scaled horizontally by spinning up multiple instances of the same container image, which makes it easier to handle spikes in traffic or demand.

The speakers also highlighted the importance of using tools like Docker Swarm for managing and orchestrating containers across multiple hosts. By using containerization and cloud-native architectures, teams can easily deploy applications to cloud platforms like AWS, Azure, or Google Cloud Platform and scale them horizontally based on demand.

Efficiency

Finally, the speakers discussed the efficiency benefits of containerization. They noted that Docker images are typically smaller than VM images, which means they can be downloaded and deployed more quickly. Containers also provide a high degree of flexibility, as they can be easily moved between different environments, such as development, testing, and production.

Overall, the speakers emphasized that containerization provides a number of benefits for managing applications in a more efficient, scalable, and secure way. By using Docker and containerization technology, teams can take advantage of these benefits and more easily manage their applications and infrastructure at scale.

Disadvantages of Containers

While containerization offers many benefits for managing application infrastructure, it also has potential downsides. During the "Containerizing When & How?" talk, the speakers discussed several disadvantages of containers that teams should keep in mind.

Security risk

The speakers noted that one of the potential downsides of containerization is that it can increase the security risk of an application. Since containers share the same host operating system kernel, any vulnerabilities in the kernel can potentially impact all containers running on the same host. Additionally, containers can be vulnerable to attacks such as privilege escalation and container breakout.

To mitigate these risks, the speakers recommended using best practices for container security, such as running containers with minimal privileges, limiting network access, and using tools like image scanning and runtime protection to detect and prevent security threats.
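The "minimal privileges" advice can be checked mechanically. The sketch below audits a container's configuration for a few common risky settings. It assumes input shaped like one element of the JSON array that `docker inspect <container>` prints (fields such as `HostConfig.Privileged`, `HostConfig.CapAdd`, `HostConfig.NetworkMode`, and `Config.User`); the rules are an illustrative subset written for this post, not a complete security policy.

```python
import json


def audit(container: dict) -> list[str]:
    """Flag a few settings that widen a container's privileges (illustrative subset)."""
    host = container.get("HostConfig", {})
    config = container.get("Config", {})
    findings = []
    if host.get("Privileged"):
        findings.append("runs in privileged mode")
    if host.get("CapAdd"):
        findings.append("adds Linux capabilities: " + ", ".join(host["CapAdd"]))
    if host.get("NetworkMode") == "host":
        findings.append("shares the host network namespace")
    # An empty User typically means no non-root user was configured,
    # so the process runs as root inside the container.
    if config.get("User", "") in ("", "root", "0"):
        findings.append("runs as root")
    return findings


# Trimmed-down, made-up example shaped like `docker inspect` output.
inspected = json.loads("""
{
  "Config": {"User": ""},
  "HostConfig": {"Privileged": true, "CapAdd": ["NET_ADMIN"], "NetworkMode": "bridge"}
}
""")

for finding in audit(inspected):
    print("WARNING:", finding)
```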

Orchestration complexity

Another disadvantage of containerization is the complexity involved in orchestrating containers. While tools like Docker Swarm and Kubernetes can make it easier to manage and deploy containers at scale, these tools can also add significant complexity to an application stack.

The speakers advised teams to carefully consider their needs and skill sets when choosing an orchestration tool and to invest in specialized expertise and resources as needed. They also recommended using automation tools and strategies to simplify container management and reduce the risk of human error.

Limited resource organization

Finally, the speakers noted that containers can compete for resources, particularly when many of them run on the same host. Containers share the host's CPU and memory, and by default Docker does not limit how much of either a container may use, so a busy container can starve its neighbors and cause performance issues if resources are not managed carefully.

To address this challenge, the speakers recommended using tools like Kubernetes or Docker Swarm to manage resource allocation and scaling, and to use container monitoring tools to detect and address performance issues as they arise.
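One knob Docker offers for this contention is the `--cpu-shares` flag: a relative weight (1024 by default) that only takes effect when containers compete for CPU. The sketch below computes the share of a fully busy host's CPU time each container would get under that proportional model. The container names are hypothetical, and the model deliberately ignores hard limits (such as `--cpus`) and finer details of the kernel scheduler.

```python
def cpu_split(shares: dict[str, int], host_cpus: float) -> dict[str, float]:
    """Divide host CPU among busy containers in proportion to their CPU shares.

    Shares are relative weights: they only matter under contention, i.e. when
    every container wants more CPU than is available.
    """
    total = sum(shares.values())
    return {name: host_cpus * weight / total for name, weight in shares.items()}


# Hypothetical containers on a 4-CPU host; 1024 is Docker's default weight.
split = cpu_split({"web": 1024, "worker": 1024, "batch": 2048}, host_cpus=4)
for name, cpus in split.items():
    print(f"{name}: {cpus:.1f} CPUs")
```

With two containers at the default weight and one at double weight, the result is 1.0, 1.0, and 2.0 CPUs. When the host is idle, any single container can still use more than its "share".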

Overall, the speakers emphasized that containerization provides many benefits for managing application infrastructure, but also requires careful consideration and management to mitigate potential risks and downsides. By taking a proactive approach to container security, orchestration complexity, and resource allocation, teams can take advantage of the benefits of containerization while minimizing potential downsides.

Orchestration with Kubernetes

The speakers noted that Kubernetes is one of the most popular container orchestration tools available today, and offers many benefits for managing containerized applications. Kubernetes provides a robust set of features for managing containers at scale, including automatic scaling, load balancing, and rolling updates.

The speakers recommended that teams consider using Kubernetes for managing containerized applications, and invest in specialized expertise and resources as needed to manage Kubernetes effectively.
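As an example of Kubernetes' automatic scaling, the Horizontal Pod Autoscaler (HPA) documentation describes its core rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with scaling skipped when the ratio is within a tolerance of 1.0 (0.1 by default). The sketch below implements only that rule; the real controller also applies min/max replica bounds, stabilization windows, and special handling for missing metrics, all omitted here.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, tolerance: float = 0.1) -> int:
    """Core HPA scaling rule, simplified (no bounds or stabilization)."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to the target: no scaling
    return math.ceil(current_replicas * ratio)


# 3 pods averaging 90% CPU against a 60% target -> scale out.
print(desired_replicas(3, current_metric=90, target_metric=60))  # 5
# 4 pods at 63% against a 60% target is within tolerance -> unchanged.
print(desired_replicas(4, current_metric=63, target_metric=60))  # 4
```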

Container Registries

Another important aspect of containerization is managing container images and their dependencies. Container registries provide a centralized location for storing and distributing container images, and can help teams manage dependencies more effectively.

The speakers recommended using a container registry like Docker Hub or Google Container Registry (since superseded by Google Artifact Registry) to manage container images, and noted that many cloud providers also offer their own container registries.
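Images in a registry are addressed by references such as `nginx` or `ghcr.io/org/app:1.4`. The sketch below shows how a short name expands to a fully qualified one under Docker's defaults: registry `docker.io`, the `library/` namespace for official images, and tag `latest` when neither a tag nor a digest is given. It is a simplified parser written for this post, not Docker's reference implementation, and it performs no validation of names.

```python
def normalize_image(ref: str) -> str:
    """Expand a short image reference using Docker's default registry, namespace, and tag."""
    name, _, digest = ref.partition("@")
    tag = ""
    if ":" in name.rsplit("/", 1)[-1]:  # a ':' in the last path component starts the tag
        name, tag = name.rsplit(":", 1)

    first, _, rest = name.partition("/")
    if rest and ("." in first or ":" in first or first == "localhost"):
        registry, path = first, rest  # explicit registry host, optionally with a port
    else:
        registry, path = "docker.io", name
    if registry == "docker.io" and "/" not in path:
        path = "library/" + path  # official images live under library/

    result = f"{registry}/{path}"
    if tag:
        result += f":{tag}"
    if digest:
        result += f"@{digest}"  # a digest pins the exact image content
    elif not tag:
        result += ":latest"  # default tag when neither tag nor digest is given
    return result


for ref in ["nginx", "bitnami/redis:7.2", "ghcr.io/org/app:1.4", "localhost:5000/myapp"]:
    print(f"{ref:24} -> {normalize_image(ref)}")
```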

Container Monitoring/Logging

The speakers also emphasized the importance of monitoring containerized applications to ensure they are running smoothly and identify issues quickly when they arise. Container monitoring tools can provide real-time insights into container performance, resource utilization, and application health.

The speakers recommended tools such as Prometheus (for metrics) or Datadog (for metrics and logs), and noted that many cloud providers also offer their own monitoring and logging tools.
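Prometheus collects metrics by scraping an HTTP endpoint that serves plain text in its exposition format: `# HELP` and `# TYPE` lines followed by samples such as `name{label="value"} 42`. The sketch below renders per-container memory readings as a gauge in that format. The metric name `app_container_memory_bytes` and the sample values are hypothetical, and in a real service you would normally use an official Prometheus client library rather than formatting the text by hand.

```python
def render_gauge(name: str, help_text: str, samples: dict[str, float]) -> str:
    """Render one gauge metric in the Prometheus text exposition format.

    `samples` maps a container name to its current value; each becomes a
    sample labelled with container="<name>".
    """
    lines = [f"# HELP {name} {help_text}", f"# TYPE {name} gauge"]
    for container, value in sorted(samples.items()):
        # Label values must escape backslashes, double quotes, and newlines.
        escaped = (container.replace("\\", "\\\\")
                   .replace('"', '\\"')
                   .replace("\n", "\\n"))
        lines.append(f'{name}{{container="{escaped}"}} {value}')
    return "\n".join(lines) + "\n"


print(render_gauge(
    "app_container_memory_bytes",  # hypothetical metric name
    "Memory currently used by each container.",
    {"web": 73400320, "api": 52428800},
), end="")
```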

Automation Tools

Finally, the speakers noted that automation is critical for managing containerized applications at scale. Automation tools can help teams manage container deployments, scaling, and updates more efficiently and reduce the risk of human error.

The speakers recommended using automation tools like Jenkins, GitLab CI/CD, or CircleCI to automate the containerization process and simplify container management.
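Jenkins, GitLab CI/CD, and CircleCI each have their own pipeline syntax, but the core of a container pipeline is usually the same ordered, fail-fast sequence: build an image, test it, and push it to a registry. The sketch below expresses that sequence in Python rather than in any CI tool's configuration format. The image name, the `pytest` test command inside the image, and tagging by commit SHA are assumptions for illustration; the `runner` parameter lets you dry-run the steps without Docker installed.

```python
import subprocess
from typing import Callable, Sequence

Runner = Callable[[Sequence[str]], int]


def shell_runner(cmd: Sequence[str]) -> int:
    """Run a command for real and return its exit code."""
    return subprocess.run(list(cmd)).returncode


def container_pipeline(image: str, commit_sha: str) -> list[list[str]]:
    """Ordered steps for a typical build -> test -> push pipeline (hypothetical layout)."""
    tag = f"{image}:{commit_sha[:7]}"  # tag each image with the commit it was built from
    return [
        ["docker", "build", "-t", tag, "."],
        ["docker", "run", "--rm", tag, "pytest"],  # assumes the image ships its tests
        ["docker", "push", tag],
    ]


def run_pipeline(steps: list[list[str]], runner: Runner = shell_runner) -> bool:
    """Run steps in order and stop at the first failure, as a CI job would."""
    for step in steps:
        print("$", " ".join(step))
        if runner(step) != 0:
            print("step failed; aborting pipeline")
            return False
    return True


if __name__ == "__main__":
    # Dry run: pretend every command succeeds instead of invoking Docker.
    steps = container_pipeline("registry.example.com/team/app", "3f2a9c1d4b")
    run_pipeline(steps, runner=lambda cmd: 0)
```

Stopping at the first failing step means a broken build or a failing test never produces a pushed image, which is the main way such automation reduces the risk of human error.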

Overall, the speakers emphasized that containerization requires careful consideration and management to take full advantage of its benefits. By using orchestration tools like Kubernetes, container registries, monitoring/logging tools, and automation tools, teams can simplify container management and ensure their containerized applications are running smoothly and efficiently.

An Amazing Panel Discussion😁

The panel discussion at the WeMakeDevs Meetup featured four prominent speakers in the DevOps and open-source community: Siddhant Khisty, Aakansha Priya, Bhavya Sachdeva, and Rakshit Gondwal.

The discussion focused on the importance of open source in the DevOps world and the challenges and opportunities that come with working in this space. The panelists shared their insights and experiences with the audience, discussing topics like the benefits of open source, how to get involved in open source projects, and how to overcome common challenges like maintaining code quality and ensuring project sustainability.

The discussion then shifted to the topic of public speaking skills, with the panelists sharing their own experiences and offering tips and strategies for improving communication and presentation skills.

Overall, the panel discussion was an engaging and informative session that provided attendees with valuable insights and perspectives on open source and public speaking in the DevOps community. Attendees had the opportunity to ask questions and participate in the discussion, which helped to create a collaborative and supportive atmosphere for learning and growth.

🎉Closing Ceremony🎉

The closing ceremony of the WeMakeDevs Meetup was a great way to wrap up the event. Attendees had the opportunity to take group photos, which helped to capture the memories of the day and create a sense of community among the attendees.

In addition, attendees received some awesome swag as a token of appreciation for their participation in the event. The swag included t-shirts, stickers, and other items related to DevOps and open source, which attendees could take home as a reminder of the event.

Finally, the networking session at the end of the event was a great opportunity for attendees to connect with like-minded individuals and discuss their shared interests in DevOps and open source. Attendees could exchange ideas, ask questions, and share their own experiences, which helped to build a sense of community and collaboration among the attendees.

Overall, the closing ceremony was a great way to end the event and helped to reinforce the sense of community and collaboration that had been built throughout the day.